Notifications Logical Architecture

Logical Deployment Diagram

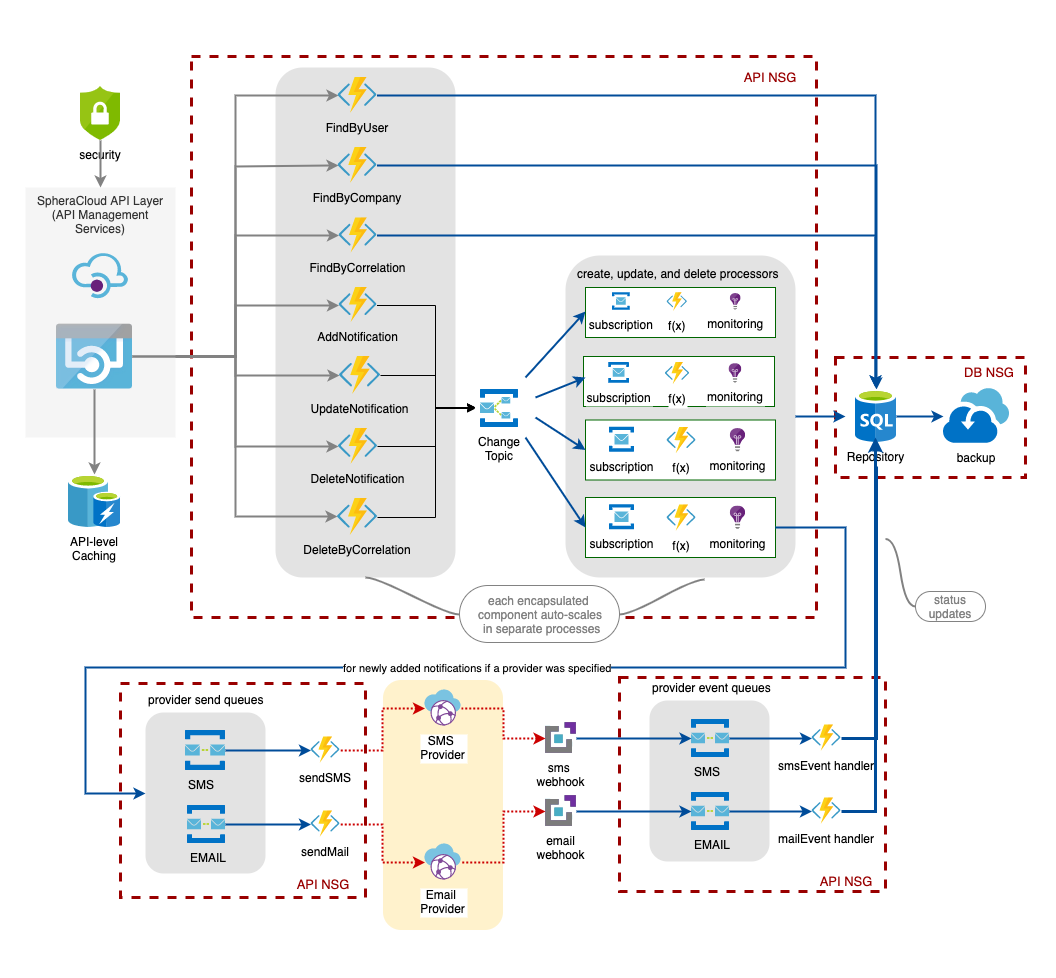

API Gateway (SpheraCloud API Layer) and Read (GET) Caching

All interactions with the Notifications Service go through the API Gateway (Azure API Management). This offers several features of particular interest from a PaaS perspective. First, it ensures that all callers are authorized (security) while providing a single, consistent proxy interface for the API regardless of where the individual components are hosted. Second, the API Gateway has built-in integration with Azure Cache for Redis, so results can be cached and reused rather than re-querying the database for every operation. Based on typical usage patterns identified industry-wide for similar functionality, a single fetch with caching is expected to prevent an average of 20 additional calls for the same data over the lifetime of a session.
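A minimal cache-aside sketch illustrates why repeated reads within a session avoid the database. It assumes an in-memory `Map` in place of Azure Cache for Redis and a hypothetical `loadFromDb` function in place of the repository query. In the actual service, caching is configured in API Management rather than hand-written; the `TtlCache` and `getWithCache` names are illustrative only.

```javascript
// Minimal cache-aside sketch with a time-to-live (TTL).
// ASSUMPTION: an in-memory Map stands in for Azure Cache for Redis, and
// `loadFromDb` is a hypothetical stand-in for the repository query.
class TtlCache {
  constructor(ttlMs, now = () => Date.now()) {
    this.ttlMs = ttlMs;
    this.now = now; // injectable clock, useful for testing expiry
    this.entries = new Map();
  }

  get(key) {
    const entry = this.entries.get(key);
    if (!entry) return undefined;
    if (this.now() >= entry.expiresAt) {
      this.entries.delete(key); // expired: evict and report a miss
      return undefined;
    }
    return entry.value;
  }

  set(key, value) {
    this.entries.set(key, { value, expiresAt: this.now() + this.ttlMs });
  }
}

// Cache-aside: check the cache first, fall back to the data store on a miss,
// then store the result so later identical reads skip the database.
function getWithCache(cache, key, loadFromDb) {
  const cached = cache.get(key);
  if (cached !== undefined) return cached;
  const value = loadFromDb(key);
  cache.set(key, value);
  return value;
}
```

Injecting the clock keeps expiry deterministic in tests; the TTL chosen would bound how stale a cached result is allowed to be.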

Using a gateway helps to decouple the front-end application from the back-end APIs. For example, API Management can rewrite URLs, transform requests before they reach the back end, set request or response headers, and so forth.

API Management can also be used to implement cross-cutting concerns such as:

- Enforcing usage quotas and rate limits

- Validating OAuth tokens for authentication

- Enabling cross-origin requests (CORS)

- Caching responses

- Monitoring and logging requests
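To make the rate-limiting concern concrete, here is a fixed-window rate limiter sketch. It illustrates the idea only and is not how API Management implements its rate-limit policies; the class name, caller keys, and limits are hypothetical.

```javascript
// Fixed-window rate limiter sketch: at most `limit` calls per caller key
// in each window of `windowMs` milliseconds.
// ASSUMPTION: illustrative only; API Management enforces rate limits through
// its own policies, and this is not its internal algorithm.
class FixedWindowRateLimiter {
  constructor(limit, windowMs, now = () => Date.now()) {
    this.limit = limit;
    this.windowMs = windowMs;
    this.now = now; // injectable clock for deterministic tests
    this.windows = new Map(); // caller key -> { windowStart, count }
  }

  // Returns true if the call is allowed, false if it should be rejected
  // (a gateway would typically answer with HTTP 429 Too Many Requests).
  tryAcquire(key) {
    const t = this.now();
    const windowStart = t - (t % this.windowMs);
    let state = this.windows.get(key);
    if (!state || state.windowStart !== windowStart) {
      state = { windowStart, count: 0 }; // new window: reset the counter
      this.windows.set(key, state);
    }
    if (state.count >= this.limit) return false;
    state.count += 1;
    return true;
  }
}
```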

If you don’t need all of the functionality provided by API Management, another option is Azure Functions Proxies. This feature of Azure Functions lets you define a single API surface for multiple function apps by creating routes to back-end functions. Functions Proxies can also perform limited transformations on the HTTP request and response. However, they don’t provide the rich policy-based capabilities of API Management, and they are not supported in version 4.x of the Azure Functions runtime, so this option applies only to older runtime versions.

All calls are made securely, exclusively through RESTful HTTPS endpoints.

Notifications Read-Only functionality

The read-only API methods map directly to massively scalable Azure functions that validate each request and then query the repository (data store) directly to retrieve matching records (notifications or messages). All read methods support paging to minimize database load, keep performance smooth, and reduce network traffic. If no paging parameters are specified, the system defaults to 20 items per page.

These functions, and no others, were chosen to interact directly with the repository because users need priority access when retrieving and reviewing their notifications across all SpheraCloud applications. The load on these three endpoints is assumed to exceed that of all other methods combined by at least an order of magnitude. Additionally, from a user's perspective, fetching results appears to be a synchronous operation, so it must be highly performant.
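The paging default can be sketched as a small normalization step run before querying. Only the default of 20 items per page comes from this document; the parameter names `pageNumber` and `pageSize` and the `MAX_PAGE_SIZE` cap are assumptions.

```javascript
// Paging-parameter normalization sketch for the read endpoints.
// The default page size of 20 comes from the service description; the
// parameter names (pageNumber, pageSize) and MAX_PAGE_SIZE are hypothetical.
const DEFAULT_PAGE_SIZE = 20;
const MAX_PAGE_SIZE = 100; // hypothetical upper bound to protect the database

function normalizePaging(query = {}) {
  // Accept only positive integers; anything else falls back to a default.
  const parsePositiveInt = (raw) => {
    const n = Number.parseInt(raw, 10);
    return Number.isInteger(n) && n > 0 ? n : undefined;
  };
  const pageNumber = parsePositiveInt(query.pageNumber) ?? 1;
  const requestedSize = parsePositiveInt(query.pageSize) ?? DEFAULT_PAGE_SIZE;
  const pageSize = Math.min(requestedSize, MAX_PAGE_SIZE);
  // OFFSET/FETCH-style values a stored procedure could use.
  return { pageNumber, pageSize, offset: (pageNumber - 1) * pageSize };
}
```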

See the Operation documents for more details.

Notifications Create, Update, and Delete functionality

All writable API methods map directly to massively scalable Azure functions that validate each request, publish it to a Notifications Change Topic (with a label specific to its operation), and then immediately return an acknowledgement to the caller. Because callers never wait for the database write, these operations complete very quickly and have minimal impact on callers.

This private Topic could be exposed so that applications place their requests on it directly, allowing a true “fire-and-forget” process for notification changes. This functionality will not be implemented unless a specific use case is identified in which the latency of the HTTPS request measurably affects caller performance.
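A minimal sketch of the validate, publish, acknowledge flow, assuming a hypothetical `publish` callback in place of a real Service Bus topic sender. `AddNotification` is named in this document; `UpdateNotification` and `DeleteNotification` are hypothetical operation labels, and the request shape is illustrative.

```javascript
// Sketch of a write endpoint: validate, publish to the change topic with an
// operation label, then acknowledge the caller without waiting for the write.
// ASSUMPTIONS: `publish` is a hypothetical stand-in for a topic sender;
// UpdateNotification/DeleteNotification labels are hypothetical.
const OPERATIONS = new Set(["AddNotification", "UpdateNotification", "DeleteNotification"]);

function handleWriteRequest(operation, body, publish) {
  if (!OPERATIONS.has(operation)) {
    return { status: 400, body: { error: `Unknown operation: ${operation}` } };
  }
  if (body === null || typeof body !== "object") {
    return { status: 400, body: { error: "Request body must be a JSON object" } };
  }
  // The label lets each subscription filter for the single operation it handles.
  publish({ label: operation, body });
  // 202 Accepted: the change is queued, not yet applied to the repository.
  return { status: 202, body: { accepted: true } };
}
```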

A separate set of Azure functions (one per operation) subscribes to the Notifications Change Topic, filtering on the message label (operation). This fan-out approach, with one topic and multiple subscribers, is useful because it:

- avoids creating and managing a large collection of queues

- provides a single dead-letter location for tracking issues

- provides a shared rule location for message retries and poison-message details

- provides a single set of access requirements (security) rather than managing (and potentially exposing) multiple keys

- guarantees delivery to all subscribers while allowing messages to be filtered during fan-out
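The label-based fan-out can be simulated in memory. This sketch assumes a simple predicate per subscription; in Azure Service Bus the filter rules are evaluated by the broker, and the subscription names below are hypothetical.

```javascript
// In-memory simulation of a topic that fans messages out to subscriptions,
// each with a filter on the message label (operation).
// ASSUMPTION: illustrative only; Service Bus evaluates subscription filters
// server-side.
class Topic {
  constructor() {
    this.subscriptions = new Map(); // name -> { filter, messages }
  }

  subscribe(name, filter) {
    this.subscriptions.set(name, { filter, messages: [] });
  }

  // Every subscription whose filter matches receives its own copy.
  send(message) {
    for (const sub of this.subscriptions.values()) {
      if (sub.filter(message)) sub.messages.push({ ...message });
    }
  }

  messagesFor(name) {
    return this.subscriptions.get(name).messages;
  }
}

// Filter factory: match messages whose label equals the given operation.
const byLabel = (label) => (message) => message.label === label;
```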

The subscription processors are responsible for actually processing all non-read (create, update, and delete) requests for the service. Each one spins up dynamically as messages arrive on its subscription. If the number of messages pending on any subscription rises beyond predefined levels, additional instances of that Azure function are created to handle the increased load.

The processor functions read the incoming message from the subscription, validate the payload, and convert it into an appropriate DTO (Data Transfer Object) that the database can understand. The function then calls the appropriate stored procedure to perform the desired action, which returns either a boolean indicating success or failure or an identity key, depending on the type of operation. Because processing is asynchronous, any result must be handled within the function rather than through user interaction. This approach was taken so that (expensive) writes can be delayed and reads take precedence. Failed writes are re-queued within the subscription and attempted again based on the retry rules… Eventually, messages/requests that exceed a defined failure limit are “dead-lettered” along with any related errors. Currently there is no active monitoring of the dead-letter queue; it should, however, be monitored regularly as part of any production-enabled process.
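A sketch of one processor, assuming a hypothetical `execProc` function for the stored-procedure call, a hypothetical `usp_AddNotification` procedure, and illustrative DTO fields. The `deliverWithRetries` helper only simulates what the message broker does (redelivery up to a maximum count, then dead-lettering); it is not code the service itself needs to contain.

```javascript
// Sketch of a subscription processor plus simulated retry / dead-letter handling.
// ASSUMPTIONS: `execProc`, "usp_AddNotification", the DTO fields, and
// maxDeliveryCount are hypothetical. In Azure Service Bus, redelivery and
// dead-lettering are performed by the broker, not by application code.

// Validate the payload and map it to the shape the stored procedure expects.
function toAddNotificationDto(payload) {
  if (!payload || typeof payload.userId !== "string" || typeof payload.text !== "string") {
    throw new Error("Invalid AddNotification payload");
  }
  return { UserId: payload.userId, MessageText: payload.text.trim() };
}

function processAddNotification(message, execProc) {
  const dto = toAddNotificationDto(message.body);
  // The stored procedure returns the new identity key (or throws on failure).
  return execProc("usp_AddNotification", dto);
}

// Deliver a message until it succeeds or the attempts reach maxDeliveryCount;
// the message is then dead-lettered with the collected errors attached.
function deliverWithRetries(message, handler, maxDeliveryCount, deadLetter) {
  const errors = [];
  for (let attempt = 1; attempt <= maxDeliveryCount; attempt++) {
    try {
      return { status: "completed", result: handler(message), attempts: attempt };
    } catch (err) {
      errors.push(err.message);
    }
  }
  deadLetter.push({ message, errors });
  return { status: "deadLettered", attempts: maxDeliveryCount };
}
```

A payload that fails validation will never succeed on retry, so dead-lettering such messages immediately, rather than consuming the full retry budget, may be worth considering.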

See the Operation documents for more details.

Service Provider interactions

New notifications added to the system (via the AddNotification API method) can optionally be sent through external delivery providers (email or SMS). If a provider is specified, a copy of the message is queued on the appropriate provider send queue. A queue is used for reasons similar to those for the topic used during write operations: it provides isolation of functionality, throttling of send requests, combining of requests where appropriate, guaranteed at-least-once delivery, and duplicate detection and removal. It also enables efficient bulkhead and circuit-breaker behavior whenever the external provider is unavailable. As before, the queued send request is picked up by an Azure function dedicated to a specific provider, which formats and forwards it. Any keys the provider returns when a message is sent are stored along with the message details in the repository.

An additional endpoint is also exposed that lets external delivery providers send status updates for a given message back to the notifications service in real time via a webhook, rather than the service having to perform expensive polling. Provider-specific status codes and enumerations are translated into the standard enumerations tracked by the service (sent, delivered, read, undeliverable, deleted).

Currently, this codebase uses two providers: Twilio for SMS and SendGrid for email. Both providers are used strictly in trial mode, and you will need to register for an account to use this functionality. Different providers can be used without fundamentally altering the notifications system, because all interactions are done through EventHub messaging; only the provider-specific Azure functions used for inbound or outbound processing would need to change.
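The circuit-breaker behavior mentioned above can be sketched as follows. The thresholds, the function passed to `call`, and the class itself are assumptions for illustration and are not taken from the service's code.

```javascript
// Circuit-breaker sketch around calls to an external delivery provider.
// ASSUMPTIONS: thresholds and the wrapped function are hypothetical; this
// illustrates the pattern, not the service's actual implementation.
class CircuitBreaker {
  constructor({ failureThreshold, resetTimeoutMs, now = () => Date.now() }) {
    this.failureThreshold = failureThreshold;
    this.resetTimeoutMs = resetTimeoutMs;
    this.now = now; // injectable clock for deterministic tests
    this.state = "closed";
    this.failures = 0;
    this.openedAt = 0;
  }

  call(fn) {
    if (this.state === "open") {
      if (this.now() - this.openedAt < this.resetTimeoutMs) {
        // Fail fast: the send request stays queued instead of hitting the provider.
        throw new Error("Circuit open: provider unavailable");
      }
      this.state = "half-open"; // timeout elapsed: allow a trial call through
    }
    try {
      const result = fn();
      this.state = "closed"; // success closes the circuit and resets failures
      this.failures = 0;
      return result;
    } catch (err) {
      this.failures += 1;
      // A failed trial call, or too many failures, (re)opens the circuit.
      if (this.state === "half-open" || this.failures >= this.failureThreshold) {
        this.state = "open";
        this.openedAt = this.now();
      }
      throw err;
    }
  }
}
```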
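A sketch of the status translation step. The provider values used as keys (Twilio message statuses such as `undelivered`, SendGrid event types such as `bounce` and `open`) are real, but which standard status each maps to is an assumption made for illustration; statuses not in the table are ignored.

```javascript
// Sketch of translating provider-specific webhook statuses into the
// service's standard statuses.
// ASSUMPTION: the mapping choices are illustrative; no provider status is
// mapped to "deleted" in this sketch.
const STANDARD_STATUS = Object.freeze({
  SENT: "sent",
  DELIVERED: "delivered",
  READ: "read",
  UNDELIVERABLE: "undeliverable",
  DELETED: "deleted",
});

const PROVIDER_STATUS_MAP = new Map([
  ["twilio", new Map([
    ["sent", STANDARD_STATUS.SENT],
    ["delivered", STANDARD_STATUS.DELIVERED],
    ["read", STANDARD_STATUS.READ],
    ["undelivered", STANDARD_STATUS.UNDELIVERABLE],
    ["failed", STANDARD_STATUS.UNDELIVERABLE],
  ])],
  ["sendgrid", new Map([
    ["processed", STANDARD_STATUS.SENT],
    ["delivered", STANDARD_STATUS.DELIVERED],
    ["open", STANDARD_STATUS.READ],
    ["bounce", STANDARD_STATUS.UNDELIVERABLE],
    ["dropped", STANDARD_STATUS.UNDELIVERABLE],
  ])],
]);

// Returns the standard status, or null for providers or statuses the service
// does not track (e.g. intermediate states such as Twilio's "queued").
function toStandardStatus(provider, providerStatus) {
  const map = PROVIDER_STATUS_MAP.get(String(provider).toLowerCase());
  if (!map) return null;
  return map.get(String(providerStatus).toLowerCase()) ?? null;
}
```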
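Because only the provider-specific functions would need to change, the provider boundary can be sketched as a registry of adapters keyed by channel. The adapter shape and the simplified payloads below are hypothetical; they are not the actual Twilio or SendGrid request formats.

```javascript
// Sketch of isolating provider-specific code behind a common adapter shape so
// a provider can be swapped without touching the rest of the pipeline.
// ASSUMPTIONS: the adapter interface (name, channel, format) and the payload
// shapes are hypothetical simplifications.
function createProviderRegistry() {
  const adapters = new Map(); // channel ("sms" | "email") -> adapter
  return {
    // Registering a new adapter for a channel replaces the previous provider.
    register(adapter) {
      adapters.set(adapter.channel, adapter);
    },
    // Formats a notification for whichever provider currently serves the channel.
    format(channel, notification) {
      const adapter = adapters.get(channel);
      if (!adapter) throw new Error(`No provider registered for channel: ${channel}`);
      return { provider: adapter.name, payload: adapter.format(notification) };
    },
  };
}

const smsAdapter = {
  name: "twilio",
  channel: "sms",
  format: (n) => ({ to: n.phone, body: n.text }),
};

const emailAdapter = {
  name: "sendgrid",
  channel: "email",
  format: (n) => ({ to: n.email, subject: n.subject, text: n.text }),
};
```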

Repository

The data store (repository) for the notifications service uses Azure SQL Database, a platform-as-a-service offering. It was chosen for a number of benefits, including automated backups, dynamic clustering based on load, performance, availability across regions, guaranteed consistency, and cost (about 30% of the cost of a dedicated VM-hosted Microsoft SQL Server). The database uses a standard relational structure with foreign-key relationships between data items. All items use database-generated UUIDs (Universally Unique Identifiers) in the standard 8-4-4-4-12 format. All operations against the database are executed through parameterized stored procedures; no direct table or view access is enabled.
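A sketch of checking the 8-4-4-4-12 UUID format before a value reaches the repository, and of passing it as a named parameter rather than concatenating it into SQL text. The procedure name `usp_GetNotification` and the request shape are hypothetical; a real SQL client would receive these values as typed parameters.

```javascript
// Sketch: validate UUIDs and build a parameterized stored-procedure request.
// ASSUMPTIONS: "usp_GetNotification" and the request shape are hypothetical.
// Canonical 8-4-4-4-12 hexadecimal format, case-insensitive.
const UUID_PATTERN = /^[0-9a-f]{8}-[0-9a-f]{4}-[0-9a-f]{4}-[0-9a-f]{4}-[0-9a-f]{12}$/i;

function isUuid(value) {
  return typeof value === "string" && UUID_PATTERN.test(value);
}

function buildGetNotificationRequest(notificationId) {
  if (!isUuid(notificationId)) {
    throw new Error("notificationId must be a UUID in 8-4-4-4-12 format");
  }
  // The value travels as a named parameter, never as part of the SQL text.
  return {
    procedure: "usp_GetNotification",
    parameters: [{ name: "NotificationId", value: notificationId }],
  };
}
```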

See Repository documentation for more details.

NSGs (Network Security Groups)

Each of the primary regions of this service is separated into its own NSG. This provides security isolation and locks down access to every item within an NSG. None of the Azure functions are directly accessible from outside the service boundary; the only way to call them is through the securely exposed interface of the API Management Service (Gateway). Additionally, the database is accessible only from the API NSG, which prevents access from anywhere else in the environment. Beyond the obvious security benefits, this also ensures that no inadvertent integrations occur at the data level.

The only entry points into this system are those exposed through the API Gateway.

Configuration

While the code in the system provides a configuration object, it is merely a convenient wrapper around environment variables deployed as part of the Azure functions. This approach was taken because environment configuration values can be created and injected at deployment time and are not needed at development time. It helps ensure that no secrets or keys become part of the codebase or visible in any code repository. Within Azure functions and many other serverless components, environment variables are the preferred, and in some cases the only, configuration option available.

Unit tests that exercise functionality relying on keys require those keys to be injected at test time. This can be done with a pretest function that sets the environment variables, for example:
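A minimal sketch of a configuration object that only wraps environment variables. The setting names (`SQL_CONNECTION_STRING`, `SERVICEBUS_CONNECTION_STRING`, `DEFAULT_PAGE_SIZE`) and the `readSetting`/`loadConfig` helpers are hypothetical; Azure Functions exposes app settings to the code as environment variables, which is what `process.env` reads.

```javascript
// Sketch of a configuration object that only wraps environment variables,
// so no secrets live in source control.
// ASSUMPTIONS: setting names and helper names are hypothetical.
function readSetting(env, name, { required = true, defaultValue } = {}) {
  const value = env[name];
  if (value === undefined || value === "") {
    // Fail fast at startup rather than at first use of a missing secret.
    if (required && defaultValue === undefined) {
      throw new Error(`Missing required setting: ${name}`);
    }
    return defaultValue;
  }
  return value;
}

// `env` defaults to process.env but can be replaced with a plain object in tests.
function loadConfig(env = process.env) {
  return Object.freeze({
    sqlConnectionString: readSetting(env, "SQL_CONNECTION_STRING"),
    serviceBusConnectionString: readSetting(env, "SERVICEBUS_CONNECTION_STRING"),
    defaultPageSize: Number(readSetting(env, "DEFAULT_PAGE_SIZE", { defaultValue: "20" })),
  });
}
```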

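```javascript
// General form: process.env.<settingName> = <value>;

// Example: Node.js stores environment values as strings,
// so assigning the number 123 would also be read back as "123".
process.env.mysetting = "123";
```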
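A sketch of a hypothetical `withEnv` test helper that applies settings for a single test and restores the previous values afterwards, so settings injected for one test do not leak into the next.

```javascript
// Hypothetical test helper: set environment variables for the duration of
// `fn`, then restore whatever was there before (or remove them if unset).
function withEnv(vars, fn) {
  const previous = {};
  for (const [name, value] of Object.entries(vars)) {
    previous[name] = process.env[name];
    process.env[name] = String(value); // env values are always strings
  }
  try {
    return fn();
  } finally {
    for (const [name, value] of Object.entries(previous)) {
      if (value === undefined) delete process.env[name];
      else process.env[name] = value;
    }
  }
}
```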